Research Note: You made it all the way to week 8 of 8 of the ML syllabus. You can find the files for the syllabus, which is still being built, on GitHub. The latest version of the draft is also available in PDF form.

This lecture comes in two parts. First, I’m going to share a few scholarly articles that dig into what MLOps involves and how researchers are approaching the topic. Second, I’ll share my own insights on MLOps, which I have been presenting for the last few years. Once you reach the point of applying ML techniques in production, you will end up needing MLOps.

MLOps research papers

Alla, S., & Adari, S. K. (2021). What is MLOps? In Beginning MLOps with MLFlow (pp. 79-124). Apress, Berkeley, CA. https://arxiv.org/pdf/2103.08942.pdf

Zhou, Y., Yu, Y., & Ding, B. (2020, October). Towards MLOps: A case study of ML pipeline platform. In 2020 International Conference on Artificial Intelligence and Computer Engineering (ICAICE) (pp. 494-500). IEEE. https://www.researchgate.net/profile/Yue-Yu-126/publication/349802712_Towards_MLOps_A_Case_Study_of_ML_Pipeline_Platform/links/61dd00575c0a257a6fdd62f3/Towards-MLOps-A-Case-Study-of-ML-Pipeline-Platform.pdf

Renggli, C., Rimanic, L., Gürel, N. M., Karlaš, B., Wu, W., & Zhang, C. (2021). A data quality-driven view of MLOps. arXiv preprint arXiv:2102.07750. https://arxiv.org/pdf/2102.07750.pdf

Ruf, P., Madan, M., Reich, C., & Ould-Abdeslam, D. (2021). Demystifying MLOps and presenting a recipe for the selection of open-source tools. Applied Sciences, 11(19), 8861. https://www.mdpi.com/2076-3417/11/19/8861/pdf

My insights about MLOps

Conceptually, I have been breaking applied ML deployment use cases down into three buckets:

Bucket 1: “Things you can call” e.g. external API services

Bucket 2: “Places you can be” e.g. ecosystems where you can build out your footprint (AWS, GCP, Azure, and many others that are springing up for MLOps delivery)

Bucket 3: “Building something yourself” e.g. open source and self-tooled solutions

These buckets affect your ability to run MLOps and how much control you have over the frameworks and underlying data pipes. Bucket one is the easiest to implement because all you have to do is go out and consume it: connect to the service, send some information to it, get some information back, and you are ready to go. Bucket two is about operating entirely within an ecosystem where you can build out your footprint for the endeavor: AWS, Azure, GCP, and the many others springing up for MLOps delivery. I do mean many others. You should be starting to see ecosystems beyond the major three become available, each offering a different workflow and a different place to serve up your ML models and get going in this space. Bucket three is where you build something yourself with open source and self-tooled solutions.

A few years ago, bucket three was the primary place people were building, but now we are seeing a shift into the other buckets. API-based solutions are readily available, and these ecosystems let you get going very quickly. Things are moving around and changing. Sorting use cases into three buckets helps me think about where things are now and where they are going to happen. It is a very tactical question rather than a strategic one.
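To make bucket one a little more concrete, here is a minimal sketch of the “connect, send information out, get information back” loop using only the Python standard library. The endpoint URL, the JSON payload shape, and the bearer-token header are all hypothetical placeholders; every hosted ML API documents its own URL, request format, and authentication scheme, so treat this as an illustration of the pattern rather than any vendor’s actual interface.

```python
import json
import urllib.request

# Hypothetical endpoint for illustration only; substitute the URL and
# authentication scheme documented by whichever hosted ML API you use.
API_URL = "https://api.example.com/v1/predict"


def build_request(payload: dict, api_key: str, url: str = API_URL) -> urllib.request.Request:
    """Package the information we want scored as a JSON POST request."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Bearer-token auth is an assumption; providers differ.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_response(raw: bytes) -> dict:
    """Decode the JSON result the service sends back."""
    return json.loads(raw.decode("utf-8"))


def call_prediction_api(payload: dict, api_key: str, url: str = API_URL,
                        timeout: float = 10.0) -> dict:
    """Connect, send information out, and get information back in one call."""
    request = build_request(payload, api_key, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_response(response.read())
```

Everything that makes this bucket attractive is what the sketch leaves out: there is no model, no training pipeline, and no serving infrastructure on your side, because the provider is functionally running that part of MLOps for you.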

Some of the major players in the information technology space are trying to break into the machine learning operations (MLOps) space. As with anything else, picking the right tools to get things done is about matching the right technology to the use case to achieve the best possible results. We are really starting to see some solid maturity in the MLOps space. The next stage will be either a round of acquisitions, where established players buy up the upstart companies building MLOps tooling, or a build-out, where established players develop the necessary elements themselves to move past the newer players in the enterprise market.

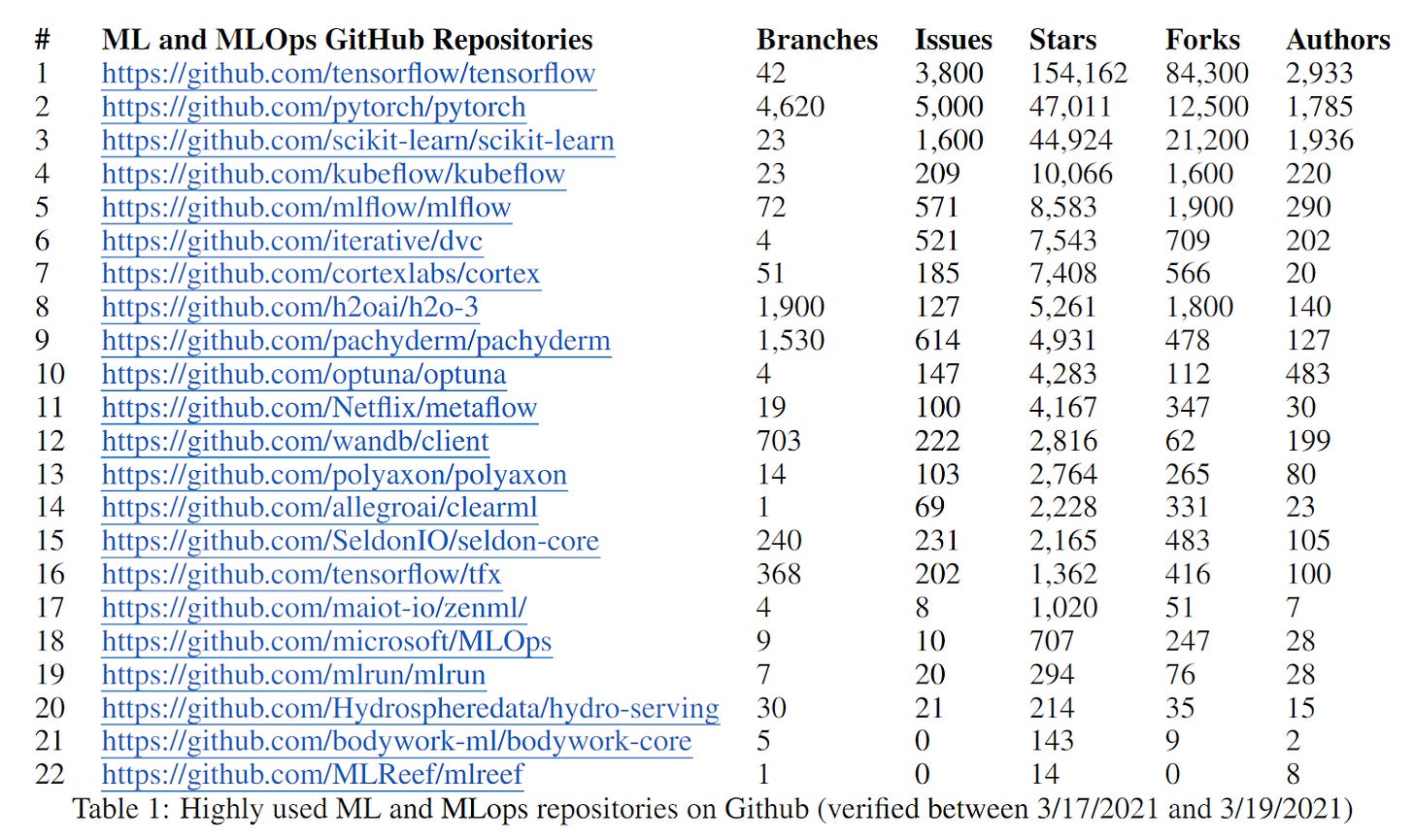

Let's look at the first technology in Table 1, which happens to be TensorFlow. You should not be surprised that TensorFlow has by far the largest influence, with 154,162 stars. Each star represents a GitHub user clicking the star button on the repository, so people have clearly placed a lot of attention on TensorFlow. It has 2,933 contributors, which means almost 3,000 people have contributed to TensorFlow. From there, PyTorch drops off considerably, going from around 154k stars to just 47k. The number of contributors drops off significantly as well, down to around 1,785. PyTorch does have 4,620 branches, and honestly I don't know why you would want to look at that many branches. No human wants to manage that many branches of anything; that is unmanageable in terms of iteration. As for scikit-learn, it has roughly 44,000 stars and 1,936 contributors. So you can see that the three major machine learning projects are definitely adopted. People are using them, forking them, making versions of them, and really digging into them out in the wild of software development right now.
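A quick calculation with the Table 1 figures quoted above puts the drop-off in relative terms. This sketch simply divides each project's stars and contributors by TensorFlow's; the PyTorch and scikit-learn star counts are the rounded values given in the prose (47k and roughly 44,000), so the percentages are approximate.

```python
# Figures quoted in the text for Table 1. PyTorch and scikit-learn star
# counts are the rounded values given in the prose, not exact counts.
REPOS = {
    "TensorFlow": {"stars": 154_162, "contributors": 2_933},
    "PyTorch": {"stars": 47_000, "contributors": 1_785},
    "scikit-learn": {"stars": 44_000, "contributors": 1_936},
}


def relative_to(baseline: str, repos: dict) -> dict:
    """Express each repo's stars and contributors as a fraction of the baseline repo."""
    base = repos[baseline]
    return {
        name: {metric: value / base[metric] for metric, value in stats.items()}
        for name, stats in repos.items()
    }


for name, ratios in relative_to("TensorFlow", REPOS).items():
    # Prints each metric as a percentage of TensorFlow's value.
    print(f"{name:<13} stars: {ratios['stars']:6.1%}  contributors: {ratios['contributors']:6.1%}")
```

With these inputs, PyTorch lands at roughly 30% of TensorFlow's stars but about 61% of its contributors, and scikit-learn at roughly 29% and 66%, so the contributor gap is noticeably narrower than the star gap.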

Now let's take it to the next level and look a little deeper at what is happening with the MLOps projects. You are going to see a major drop-off. Remember that TensorFlow had 154,162 stars; here the star counts fall considerably, to 10,000 or less. You see projects like Kubeflow and MLflow, along with complex tooling like Metaflow from Netflix, where the count can be as low as 4,000 stars, and each of these projects has fewer than 500 contributors. We have not seen everyone who is trying to implement MLOps swarm in and start using these tools. One of the reasons for that steep decline in interest has to be bucket one, described earlier, where you can just connect to an API and someone else is functionally running part of the day-to-day MLOps.

You probably noticed that the previous analysis used data from 2021. I’m sure you want to see updated data to check whether things have changed significantly, so I went back and reran the same table build to create a 2022 version. A few of the repositories changed order in terms of total stars, but for the most part things are relatively the same.
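The post does not describe how the table build works. One way to rerun a comparison like this is sketched below, assuming the public GitHub REST API's repository endpoint (`GET /repos/{owner}/{repo}`), whose JSON response includes `full_name`, `stargazers_count`, and `forks_count`. The repository list is illustrative rather than the exact set behind Table 1, and contributor counts are not part of that response, so they are left out here.

```python
import json
import urllib.request

# Repositories discussed in this post; illustrative, not the exact Table 1 set.
REPOS = [
    "tensorflow/tensorflow",
    "pytorch/pytorch",
    "scikit-learn/scikit-learn",
    "kubeflow/kubeflow",
    "mlflow/mlflow",
    "Netflix/metaflow",
]


def fetch_repo(slug: str, timeout: float = 10.0) -> dict:
    """Fetch repository metadata from the GitHub REST API (GET /repos/{owner}/{repo})."""
    request = urllib.request.Request(
        f"https://api.github.com/repos/{slug}",
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def to_row(repo: dict) -> tuple:
    """Keep only the columns the table needs: name, stars, forks."""
    return (repo["full_name"], repo["stargazers_count"], repo["forks_count"])


def build_table(repos: list) -> list:
    """Sort rows by star count, largest first, so order changes between years stand out."""
    return sorted((to_row(r) for r in repos), key=lambda row: row[1], reverse=True)


def print_table(rows: list) -> None:
    """Print the rows as a fixed-width text table."""
    print(f"{'repository':<28}{'stars':>10}{'forks':>10}")
    for name, stars, forks in rows:
        print(f"{name:<28}{stars:>10,}{forks:>10,}")
```

Running `print_table(build_table([fetch_repo(s) for s in REPOS]))` once per year and comparing the row order is one simple way to spot the kind of reshuffling described above. Unauthenticated requests to the GitHub API are rate limited, so a larger repository list would likely need an access token.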

Links and thoughts:

“The Biggest Tech Divorce - WAN Show September 16, 2022”

“Everyone knows what YouTube is. Few know how it really works.”

“Websites are back: inside The Verge's redesign” (This is pure Nilay meta content)

What’s next for The Lindahl Letter?

Week 88: The future of publishing

Week 89: Your ML model is not an AGI

Week 90: What is probabilistic machine learning?

Week 91: What are ensemble ML models?

Week 92: National AI strategies revisited

Week 93: Papers critical of ML

Week 94: AI hardware (RISC-V AI Chips)

Week 95: Quantum machine learning

I’ll try to keep the what’s next list forward-looking, with at least five weeks of posts in planning or review. If you enjoyed this content, then please take a moment and share it with a friend. If you are new to The Lindahl Letter, then please consider subscribing. New editions arrive every Friday. Thank you and enjoy the week ahead.